In the current environment where data is king, making sure that your data is consolidated and validated on a regular basis is an important part of the day, just like that first cup of coffee. Once the consolidation and validation has finished, usually something happens with that data. It could be that reports or spreadsheets are generated, notifications for both valid data and data exceptions are sent out, or employees use the data to continue a business process.

As with anything in IT, there are many ways to do this and most of them would actually work. However, not every project is a nail that you can use your trusty familiar hammer to finish off.

Here is the situation…..

Company ‘A’ is growing by acquiring and merging with its competitors from different regions. Over the past 5 years, it has acquired 4 separate companies each with its own IT infrastructure and each with its own degree of sophistication. Since there is no single customer system in use, there is a need to consolidate the data from the 4 acquired companies, into your system of record database on a regular basis.

During this integration, the dissimilar data formats need to be transformed into a common record layout, data needs to be validated, a check for duplicates needs to be performed, and the data needs to be inserted into the system of record.

After all of the integrations are complete a number of post processing steps need to be completed:

- Customer service of each subsidiary needs to be notified of any invalid customer data

- The corporate office needs to be notified of new customers for each region so they can be sent a “welcome package”

- Customer Analytics needs to be updated at corporate

- Billing needs to perform credit checks on new customers

Need a Bigger Hammer…..

Looking at the requirements, I can see that there is a large quantity of data integration and transformation, followed by several types of reporting and then finally an employee notification to start tasks following a standardized business process.

For the first part, we should use a tool that is good at extraction, transformation and data loading (ETL). In this example, I will be using SQL Server Integration Services (SSIS). After the data is extracted, transformed, validated and integrated we need to inform a set of users that they have work to do. Better yet we can start an RPA Bot to do the tedious work for them so they can stay focused on work that needs a human touch and reasoning. If you want to read more about RPA , this Apex Blog article gives a good explanation and example of Robotic Process Automation.

What is SSIS…..

SQL Server Integration services (SSIS) is a Microsoft ETL application that will allow you to build multi-level processes to handle anything data related. In this example, I will just be scratching the surface of its capabilities, but it should give you a good indication of the possibilities. This is NOT a full build out of a SSIS package and is not production ready. You can read more about SSIS here.

Lets get started …..

Taking a logical look at the process that we need to create, I see these major steps:

- Create the empty output files to report on the results (successes and failures)

- Integrate and Validate from subsidiary B from an Excel File

- Integrate and Validate from subsidiary C from a Web Service Call

- Integrate and Validate from subsidiary D from an Oracle DB

- Integrate and Validate from subsidiary E from a Text File

- Notify Customer Service department for each subsidiary of invalid data for that region

- Initiate welcome package label generation

- Notify the Analytics group about new customers

- Perform Credit checks on New Customers

- Clean up files from over 30 days ago

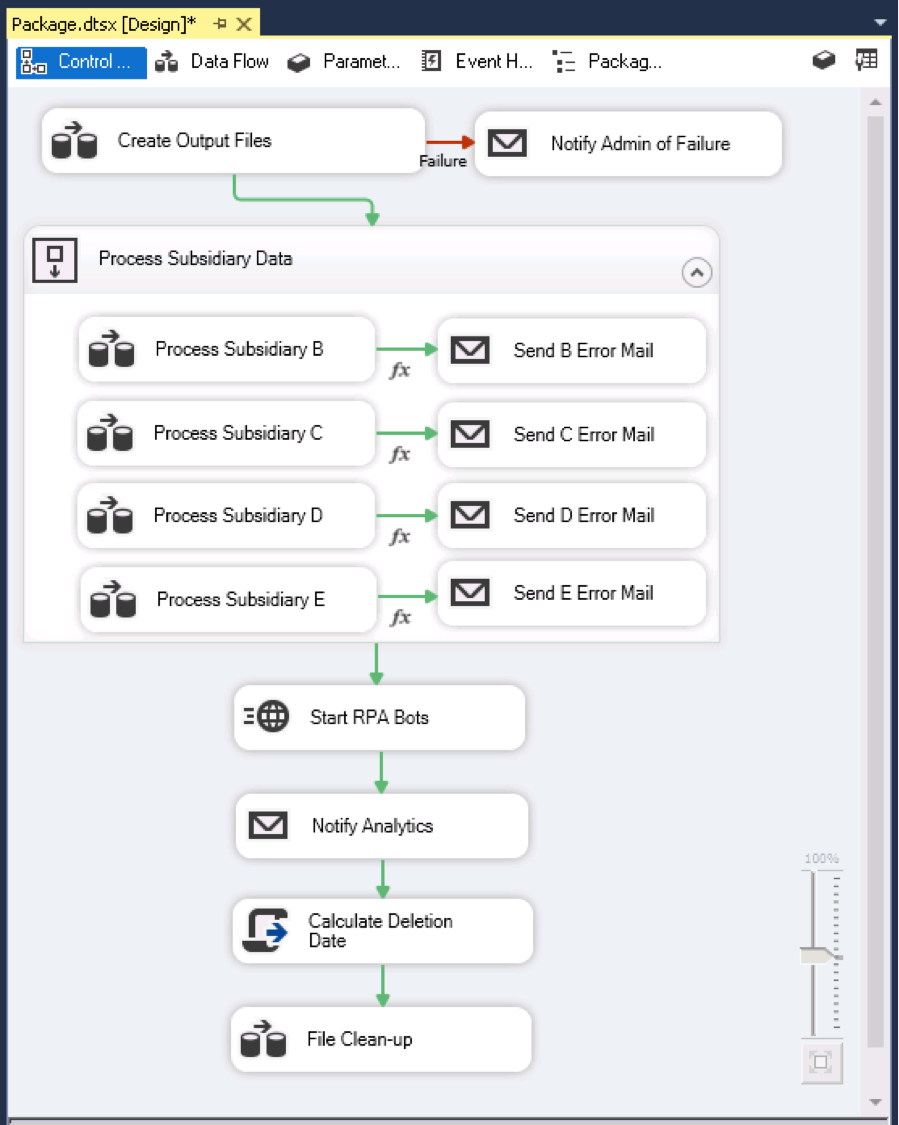

Creating my top-level package, it would lay it out to look like the following

The first data flow (Create Output Files) is a collection of file system tasks to create an empty exception file for each subsidiary and a new customer file to be used later in the process. If the creation of any of the files fails, the package will send an email to operations informing them of the failure.

After the Output File Creation task finishes, the flow then moves to a sequence container (Process Subsidiary Data) that contains a separate data flow for each subsidiary. I have not linked these four tasks into a series, as the order in which they execute does not matter and if the bandwidth exists to execute all 4 in parallel, I would be more then happy to let that happen.

Each subsidiary processing task is connected to a Send Error Email task as shown in the diagram. Looking closley you can see that the two tasks are connected by a precedence constraint, using a function, that only allows the email to be sent if there are any data validation errors that have been logged.

The Devil is in the details …..

The pattern inside of each subsidiary data flow task is the same. The steps needed for each subsidiary are:

- Connect and extract the data from the data source

- Transform and validate the data

- Check for the existence of the customer in the system of record

- Add the new customer to the system of record

- Add the new customer to the new customer spreadsheet

The data flow for each subsidiary would look like this:

The only differences for each subsidiary are the source of the data connection that is used, the transformation that is required from each subsidiary structure to the corporate database structure and the base filename to hold the exceptions.

Lets make a connection …..

The key to this pattern is setting up the data connection for each of the different subsidiary data sources. Luckly SSIS has the tools to help us do just that. A connection manager defines a connection from a variety of sources to the data that you can then use in your flow diagrams. They can be set-up so it is available by all of the components of the entire SSIS package or localized to an individual data flow. The included connectors include:

- SQL Server

- Excel

- Flat Text File

- Oracle

- ADO Net

- Change Data Capture (CDC)

- ODBC

- OLE DB

- Raw File

- XML

Let the Bots begin …..

Looking back at the top level flow diagram, you can see that after the completion of the data flow task for each of the four subsidiaries, the RPA Bots are started.

We could have made separate calls to each Bot, however instead of having SSIS make calls, I am calling a controller bot that will kick off the worker Bots.

These Bots will perform the following automated tasks using the new customer excel spreadsheet that was generated in the subsidiary processing step:

- Log into credit reporting websites for each new client and grab the credit rating related information and update the customer database

- Log into the shipping application and generate a shipping label for each new client allowing for faster welcome package shipping

While this is only a few tasks that could be automated, the RPA technology could be used to automate a wide variety of “swivel chair” tasks.

Let the people start too …..

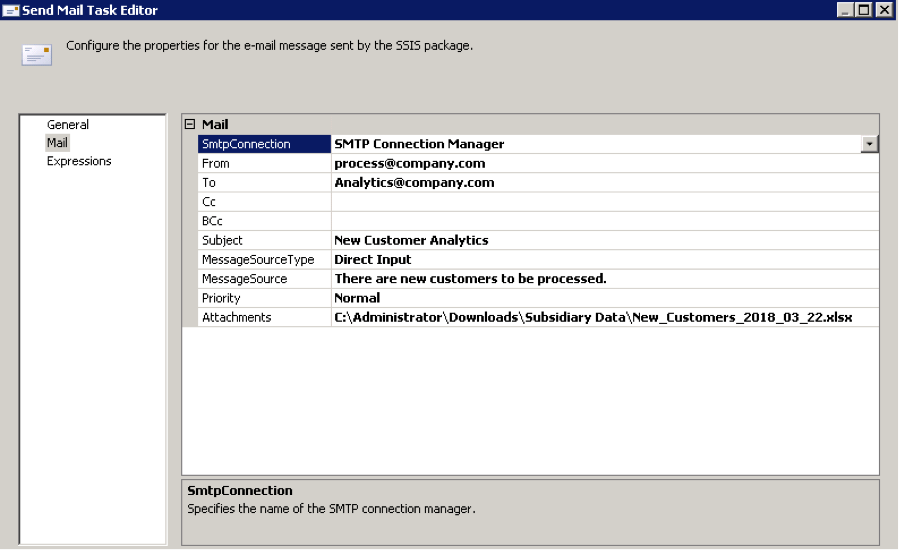

The next step on the upper level flow will send an email to the Analytics group with the newly added customers in an excel attachment that was created during the subsidiary processing . This will allow them to manually perform the research on the analytics for each new customer. The research for this is so dynamic that creating an RPA Bot, while possible, would not be cost effective.

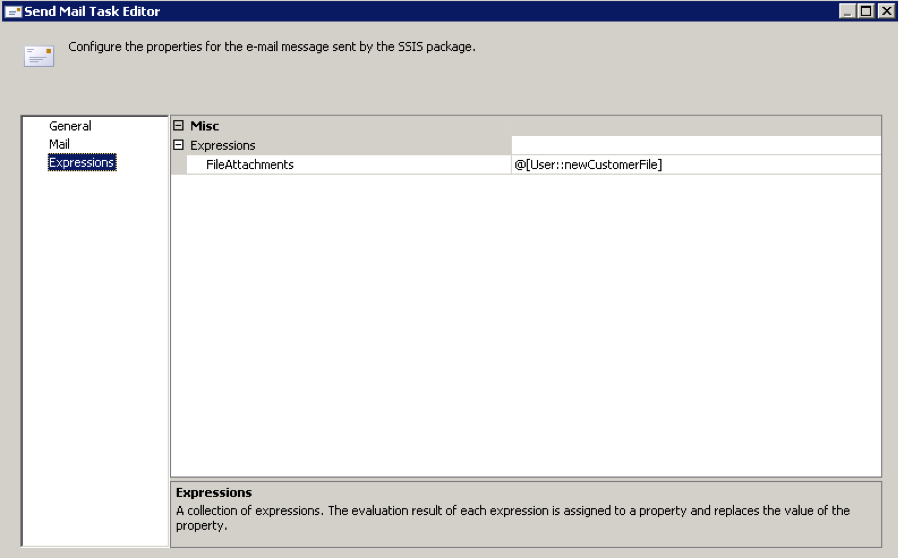

In the Notify Analytics task, we use a variable for the filename that is attached to the email. The variable is created at the package level and populated in the first task (Create Output Files). Specifying an expression will load the value of the variable into the parameter for sending the email at runtime. During development, it will place the variable default value in parameter.

Wrap it up …..

The last two steps in the top-level flow are used to clean up old files. The first one is a script task that calculates a filename with the embedded date from 30 days ago accounting for short months and leap years. This variable is then used in the last step to go back out into the file system and delete the files for this calculated date.

This SSIS Package can then be scheduled to run on a schedule (daily, weekly, hourly, etc.) to ensure a timely and consistent integration and handling of new customers.

Final thoughts …..

With the ability to combine technologies, tedious error-prone human tasks can be automated to improve the efficiency of most processes. While not a complete solution, hopefully this demonstrates that thinking outside of the box, can make a business run better.

We at Apex Process Consultants are experts at business process improvement and our experience in many areas allows us to combine technologies in the most effective ways to help you.