Siri isn’t HAL, but that’s okay, right?

Few aspects of information technology have been as massively misunderstood as Artificial Intelligence. Before getting into the discussion, let's take a minute and talk about what AI is and what it isn’t.

The history of Artificial Intelligence is not lengthy, but it is complicated. We could start arbitrarily with Claude Shannon’s 1937 master’s thesis, which demonstrated that electrical switching circuits could solve logic problems using Boolean algebra. Or we could go back to Ada Lovelace’s letters to Charles Babbage where she postulated that through abstraction his mechanical contrivance could be used to solve problems mechanically. Arbitrary moments, but both are significant. You could read about Vannevar Bush, Alan Turing, Marvin Minsky, John McCarthy, and many others and still not have a full picture of how AI has developed.

The more poetic way to describe the development of AI would be to say it was built on a foundation of speculative promises, delivered on very few of them, yet has accomplished many amazing things that weren’t mentioned in the brochure. The disconnect between what has actually transpired in AI and what was promised is significant because disappointed consumers represent both a danger and an opportunity for innovators.

The Promise

When it comes to technologies that have been poorly represented in fiction, Artificial Intelligence may be the most egregiously oversold and inaccurately portrayed. From the homicidally neurotic HAL 9000 from 2001: A Space Odyssey to the helpful “butler AI” Jarvis from the Marvel Cinematic Universe, the fictional version of AI comes across as human-level consciousness behind a screen. Often this consciousness is insane or dangerous.

The interfaces for these AI are often shown as robots or, more specifically, as androids. The premise of 2001 makes the AI quite dangerous to the crew of the space mission, but HAL is not particularly frightening if you can walk away from him. The W.O.P.R. from WarGames had no hands or feet, but it packed a nuclear wallop.

It’s the androids that seem to really add menace to the AI though. The skull-crushing androids of James Cameron’s Terminator series are iconic examples of the misanthropic AI in an unstoppable body.

Fictional depictions of technology can raise people’s expectations about what real technology is capable of to the point that they become disappointed with reality. We’ve been watching robots on television and in movies for so long that when our Roomba doesn’t behave like the robot from Lost in Space or Star Trek: The Next Generation, it is a let-down.

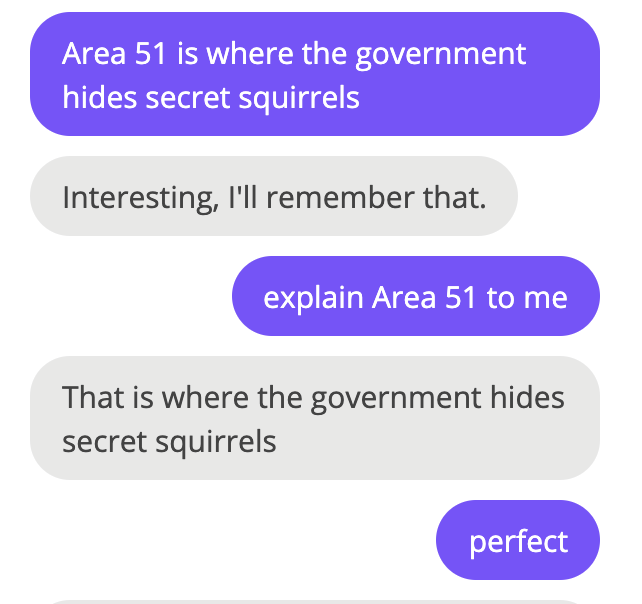

The disparity between what we expect and what we actually get with AI might be best shown with Amazon’s Echo and Apple’s Siri. The services sound quite human and understand many commands, but after a few interactions in which the underlying AI can’t decipher a command that a toddler would grasp, the shortcomings become all too apparent.

Amazon is the most successful retailer in the world, but its AI for suggesting products can’t seem to figure out that you don’t want to buy 9 different versions of that action movie you just bought. Google’s navigation technology is amazing until it delivers you to a dark abandoned housing development instead of the bowling alley where the rest of your team is enjoying pizza and beer.

Even though your sad carpet robot, confused talking hockey puck and addled shopping and navigation assistants may disappoint you, there is much more to AI than these. Any time the AI interfaces with humans there is an opportunity for disappointment on the human side. Thankfully, AI hasn’t developed its own sense of disappointment.

If AI has disappointed us or slipped past our notice, the most likely cause is that we are often so busy noticing what AI isn’t that we fail to see what it actually is.

What is Artificial Intelligence?

One of the great ironies of Artificial Intelligence is that the more science tried to create artificial kinds of thinking, the more we realized how little we understand about what intelligence is. Terms like intelligence, cognition, and consciousness seem simple enough until we try to define them. Trying to get a machine to closely emulate human brain functions is difficult when we can’t even determine how our brains perform them, or what they are exactly.

So the history of AI research has been closely tied with advances in neurobiology, psychology, and mathematics. While there are many who still dream of having an artificial “human” intelligence to command, the reality of AI is that it has taken a multitude of approaches and this has resulted in a slew of different technologies of varying complexity and usefulness. Some of these are domain-specific, others are general purpose. Some call the narrow-focused AI “weak” but that adjective refers to its breadth, not to its efficacy within that domain. A “strong” AI might be able to do more with a question than just tell you that the answer lies outside its area of expertise. If you’ve ever been disappointed with Siri or Alexa because they couldn’t answer your simple question, you’ve experienced the limits of a narrow AI.

Collectively they may all be thought of as part of AI.

In the brief history of the field of Artificial Intelligence, there have been numerous forks and divergences. As particular approaches have come into favor, others have lost funding and gone dormant. Rather than go through the history of the field, let’s take a brief look at some of the dominant paradigms in play right now in the late second decade of the 21st century. Advances in cognitive approaches and the ability to apply massively parallel problem solving to incredibly large data sets have made it possible to gather unexpected, sometimes even eerie insights into various areas of expertise.

Language Processing

On the TV show Star Trek it was routine for the crew to speak to the ship’s computer in order to pose questions or ask for advice. The 1970s series Buck Rogers featured Dr. Theopolis, an erudite and wise AI carried around the neck of the diminutive android Twiki, whose Looney Tunes voice (he was voiced by Mel Blanc, the talent behind Bugs Bunny and many other iconic characters) and antics provided comic relief from the grim drama of the 25th century. These fictional examples use what we now call Natural Language Processing (NLP).

Despite the stacks of grammar books and rule sets defining what is acceptable within languages, such rules are notoriously difficult to turn into meaningful computer code, and vice versa. Perhaps it is because language is primarily a human skill that producing a machine capable of deciphering language is seen as a primary demonstration of intelligence. The dream of rendering moot the barriers of language is ancient, but the first hint that the solution might lie in computers came in the 1950s. Even though it seems like mapping words from one language to another would be simple enough, it turns out that the complexities of grammar, the nuance of poetry and metaphor, and the bite of sarcasm and satire all rely on the cognition of the receiving partner to be understood. The computer tackling this work has to not just map (for example) Russian to English, but also understand the message so that the message’s intent can be conveyed appropriately. That turned out to be a harder problem than a surface assessment would imply.

There are similarly complex obstacles to having machines listen to and understand spoken language. Anyone with a home automation speaker system is already aware of the surprising annoyances that arise when the “always listening” devices mistake your conversation for an activation command, or when they misinterpret your simple directives in the most profoundly annoying ways. Having your Amazon Echo repeatedly come awake when you’re watching Alex Trebek on TV’s Jeopardy!, or having Siri constantly play Glee covers instead of the original performances of your favorite ABBA song: sure, these are “first world problems,” but they also mark the obvious limits of what is effectively still a big challenge for AI.

Having to deal with Siri or Alexa not quite understanding your intent is a far cry from a world where the computer couldn’t listen at all and, even when it could, had no idea how to understand you. Things have improved dramatically over where they were just 10 years ago. Perhaps these quaint moments of AI inadequacy will disappear into a more disturbing “teenager” phase where the computer nods as if it understood us but is actually playing games and texting with its friends?

Deep Learning

A few remarkable developments have made Deep Learning an exciting branch of AI. Thanks to Moore’s Law, extremely fast processing power becomes cheaper every year. With Deep Learning, incredibly large data sets turn from intimidating obstacles into enticing opportunities. Approaches in Deep Learning vary in which algorithms are used and in how the data reviews are configured and applied.

The ability of AI to be trained to teach itself has resulted in major improvements in speech recognition, photo and video analysis, robot training, OCR, and self-driving vehicles. As computer power increases and data assets grow, these tools will inevitably help with a plethora of applications. In the meantime, just keep repeating the song title louder and slower until Siri either gets it right or figures out you’re being condescending to her.

Robotics

As I’ve mentioned above, the disconnect between how robots have been shown in fiction and how they’ve actually been implemented continues to foster disappointment. Back in the 1940s, Isaac Asimov wrote up his famous laws of robotics:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

A few years ago, I interviewed Daniel Wilson, a roboticist who had written a book called Robopocalypse. I was disappointed when he explained that Asimov’s Three Laws were merely a fun fictional convention for robot stories and not anything actual computer scientists were aspiring to develop.

A more interesting part of that conversation was learning how imprecisely the word robot is used. A lot of systems can be described as robots. Self-driving cars, autonomous vacuum cleaners, aerial drones, coffee vending machines, and very clever toys can all be accurately described as robots. Wilson said that even simple systems like automatic fire detection systems can be thought of as robots.

Having robots fall short of their fictional counterparts is fine by me. I don’t want a T-800 wandering the streets of Los Angeles looking for Sarah Connor. The weird-looking but amazing robots of Boston Dynamics show that the state of the art in robotics is approaching and sometimes exceeding its fictional promise.

There is another kind of robot hard at work in America today: the bots behind Robotic Process Automation (RPA). RPA has a tremendous amount of potential for putting tedious manual processes under the control of semi-intelligent bots. While researching for this article, I came across a clip from the 1957 film Desk Set, starring Spencer Tracy, Katharine Hepburn and the fictional blinkenlights system known as EMERAC. In the clip, the research team is challenged with a “John Henry” type comparison of efficiency, and the scene culminates with everyone’s most dire take on RPA. Would the bots really be taking our jobs?

The answer to that is going to be complicated. In all likelihood, RPA will lead to some job loss, but the upside is that jobs that are soul-crushingly boring can be automated. In many companies, this will lead to work assignments that are more cognitively challenging. RPA is also creating new jobs for people because even the most repetitive tasks need occasional changes and tweaking. The specter of “robots are taking our jobs!” has been lurking in our culture since at least the 1950s* and will continue to lurk for decades to come. You are probably in a better position to know whether your employer would embrace RPA as a method to empower the workforce or as a means to reduce it, but I suspect the fear of RPA is overblown compared to the actual benefits.

The Turing Test

What I’m getting at is that it is very difficult to make real systems that match up with the hype and imagination of fiction and with the hyperbole of the sales team. If you can get past those feelings of disappointment, there is a lot of amazing advancement within AI which is benefitting us even if it is obscured from our direct view by user interfaces or other forms of obfuscation. But what about the big hurdle? Can a computer pass the Turing Test?

The Turing Test is Alan Turing’s thought experiment about whether or not a person could communicate with a computer and not know there was a computer at the other end of the conversation. It is, effectively, a test to see whether a computer can pretend to be a human. However, there are several problems with this “test” as a measure of the efficacy of AI.

First, part of the test is whether or not a person can be fooled. Everyone can be fooled, but there is a broad spectrum of gullibility. An IT expert will know the kinds of questions that might trip up or confuse an AI. If you think I am wrong, just consider the ease with which phone scammers are duping Americans right now. Many of these calls don’t even have a human at the other end. A well-crafted computer script is using recorded snippets, voice recognition, and deceptive wording to trick citizens out of their wealth. Ta-da! We’ve passed the Turing Test in the dumbest possible way.

Turing called this “The Imitation Game” and it was meant to show whether a machine could demonstrate the kinds of conversational flexibility sufficient to simulate a theory of mind. Turing knew that within the context of his test parameters this would only be an imitation of human intelligence. Tricking people with clever text scripts has already been possible for decades. Even the primitive program ELIZA was able to fool some people into believing they were in conversation with an intelligence.
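To show how little machinery a "clever text script" needs, here is a minimal sketch in the general spirit of ELIZA. It is not Joseph Weizenbaum's actual script: the rules, the reflection table, and the function names `reflect` and `respond` are all invented for illustration. The trick is simply to match a keyword pattern, flip the pronouns, and hand the user's own words back as a question.

```python
import re

# Hypothetical keyword rules (not ELIZA's real script): a pattern to look for
# and a reply template that reuses whatever the user said after the keyword.
RULES = [
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first-person words for second-person ones so the echo sounds responsive.
REFLECTIONS = {"my": "your", "me": "you", "i": "you", "am": "are"}


def reflect(fragment):
    """Drop trailing punctuation and flip pronouns in the captured fragment."""
    words = fragment.rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in words)


def respond(text):
    """Return the reply for the first matching rule, or a stock prompt."""
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I am worried about my job."))
print(respond("Hello there"))
```

There is no understanding anywhere in those few lines, which is rather the point: any sense of being listened to is supplied by the person typing.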

From an Artificial Intelligence perspective, another problem with Turing’s thought game is that it isn’t aspirational. From the outset, it seems to ask “can we be tricked?” rather than “can machines become sentient?” Personally, I think we need look no further than to cats as pets to realize that humans can definitely be tricked. I have owned numerous cats and dogs through my life and one species is a loyal and intelligent animal companion, the other is cats. There’s nothing wrong with having cats as pets, but if humans were any good at spotting phony companionship there would only be one aisle of pet food at Walmart and it would not have any “authentic fish flavor.”

Computers, like cats, are cold and emotionless. When empathy and joy are present in our computer relationships, we’re the ones bringing it. Computers aren’t that into us.

The Loebner Prize is a contentious contest that purports to test the best of the chatbots out there to find which are most suited for passing the Turing Test. For the past three years, the winner has been Mitsuku, a chatbot from Steve Worswick. It is, I must confess, an impressive little chatbot but it’s not fooling me.

The future is insight

Let's discuss one of the most promising aspects of modern AI, that of providing deep and unexpected insights by mining big data. If you read a lot of AI marketing, you’ll find insights is one of the hottest products. It can be difficult to quantify exactly how AI can help you until it is applied to your company’s data. When it is effective, AI insights can be quite spooky.

To give you an example, imagine your company carries a variety of inventory items in stores across the country. You’ve been in business for two decades, and have lots of data about your inventory and sales. But what if there were other data that affected your customers’ behavior? Imagine an AI that could compare your sales history, right down to the product SKU, against the weather history. Perhaps after recurring patterns of heat or rain, there are specific sales behaviors. An obvious one might be an uptick in umbrella sales during rainy periods, but perhaps it is more complex. What if the AI could see that after hurricane season, a particular pattern of expensive generator sales kicks in? An adept AI could observe the first insight, about the weather and certain products. But the next step might be for it to look at hurricane prediction patterns for the season and to dynamically order or move inventory based on those predictions of where it will likely be needed.
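The first step of that example, spotting which products track the weather, can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the SKU names, the invented daily figures, the 0.8 threshold, and the helper names `pearson` and `flag_weather_sensitive`. A real system would work on years of data and far richer models, but the core idea is a correlation between two histories.

```python
from statistics import mean

# Invented daily records per SKU: (rainfall in mm, units sold that day).
SALES_VS_RAIN = {
    "umbrella": [(0.0, 3), (1.2, 4), (8.5, 11), (0.0, 2),
                 (15.0, 19), (4.3, 7), (0.0, 3), (22.1, 25)],
    "batteries": [(0.0, 5), (1.2, 9), (8.5, 4), (0.0, 8),
                  (15.0, 6), (4.3, 7), (0.0, 4), (22.1, 6)],
}


def pearson(pairs):
    """Pearson correlation between the two columns of (x, y) pairs."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mean_x, mean_y = mean(xs), mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a flat series carries no usable signal
    return covariance / (spread_x * spread_y) ** 0.5


def flag_weather_sensitive(history, threshold=0.8):
    """Return the SKUs whose sales rise with rainfall at or above threshold."""
    return sorted(sku for sku, pairs in history.items()
                  if pearson(pairs) >= threshold)


print(flag_weather_sensitive(SALES_VS_RAIN))
```

With these made-up numbers only the umbrella SKU is flagged. The second step from the example, acting on a forecast, would then amount to feeding predicted weather into whatever relationship was found for each flagged product.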

That’s a crude example, but the insights that these machines are able to find are not always intuitive. AI doesn’t think like us, and that’s the point. The possibilities of big data and AI insight are being unlocked right now. Big companies are offering their AI capabilities through the Software as a Service (SaaS) model. You can get IBM’s Watson, Amazon’s AWS AI services, Microsoft’s Azure, or Oracle Cloud Analytics, and leverage them through monthly cloud-based engagements without having to spend a lot on infrastructure to get started. The cloud-based approach is powerful since it is built on flexible virtualized hardware, which means you can ramp up your computing power as needed without having to do a large initial outlay of cash for systems that might not be fully engaged until you can define the project parameters.

It is good to see so much competition in this space. This means there is competitive pricing, and it also means that novel innovations are likely to arise as development teams compete.

Cogito, ergo summary

The promise of Artificial Intelligence is not illusory. We’re getting real-world benefits right now that are powered by AI. However, the disparity between the fictional promises of AI and the sometimes clunky reality of its implementation will continue to frustrate users, probably for another twenty years. One strange thing about the nature of AI is that much of it exists behind the scenes, apart from human interfaces. In those cases, we don’t see the AI at work. This invisible implementation of AI systems is likely to continue such that we may be reaping benefits without even knowing there is an underlying digital cognition at play.

Computer power grows exponentially. Even though we’re seeing some amazing products and breakthroughs with digital assistants, language processing, and robotics, the whole idea of artificial intelligence is still in its infancy. Computing power is about abstraction. As we become able to model more and more complex systems and true up those models with real data from a vast array of collection points, we move toward a future where AI will be ubiquitous. Yet the very scale of its broad applicability means that predicting the future around this technology is likely to be wildly inaccurate. How will AI impact business processes? Will we need to regulate what companies can do with AI? Should we fear a robot uprising? Stay tuned.

We’re a long way from digital consciousness. Personally, I think that’s a good thing. I’m already worried that too much reliance on AI will lead to a dumbed-down populace all too willing to turn over the thinking to the computers. I’m not ready for robot overlords, at least not while they can’t even get spelling and grammar right on the “auto-correct” suggestions. And don’t even get me started on the idea of apologizing to a computer.

I will not end my sentience with a propitiation.

*Just watch the clip. It’s maddeningly prescient and outdated at the same time.